Beginner's Guide to Oracle Clusterware Architecture and Cluster Services

Oracle Clusterware provides a complete set of cluster services to support the shared disk, load balancing cluster architecture of the Oracle Real Application Cluster (RAC) database. Oracle Clusterware can also be used to provide failover clustering services for single-instance Oracle databases and other applications. The services provided by Oracle Clusterware include:

- Cluster management, which allows cluster services and application resources to be monitored and managed from any node in the cluster

- Node monitoring, which provides real-time information regarding which nodes are currently available and the resources they support. Cluster integrity is also protected by evicting or fencing unresponsive nodes.

- Event services, which publish cluster events so that applications are aware of changes in the cluster

- Time synchronization, which synchronizes the time on all nodes of the cluster

- Network management, which provisions and manages Virtual IP (VIP) addresses that are associated with cluster nodes or application resources to provide a consistent network identity regardless of which nodes are available. In addition, Grid Naming Service (GNS) manages network naming within the cluster.

- High availability, which services, monitors, and restarts all other resources as required

- Cluster Interconnect Link Aggregation (HAIP)

Features of Oracle Clusterware

Oracle Clusterware has become the required clusterware for Oracle Real Application Clusters (RAC). Oracle Database 12c builds on the tight integration between Oracle Clusterware and RAC by extending the integration with Automatic Storage Management (ASM). The result is that now all the shared data in your cluster can be managed by using ASM. This includes the shared data required to run Oracle Clusterware, Oracle RAC, and any other applications you choose to deploy in your cluster.

In most cases, this capability removes the need to deploy additional clusterware from other sources, which also removes the potential for integration issues caused by running multiple clusterware software stacks. It also improves the overall manageability of the cluster.

Features include, but are not limited to:

- Easy installation

- Easy management

- Continuing tight integration with Oracle RAC

- ASM enhancements with benefits for all applications

- No additional clusterware required

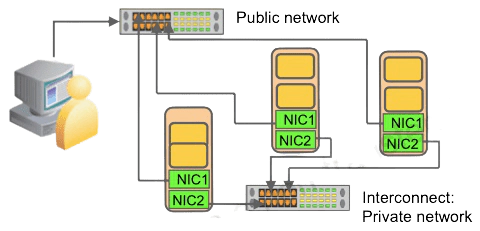

Oracle Clusterware Networking

Each node must have at least two network adapters: one for the public network interface and the other for the private network interface or interconnect. In addition, the interface names associated with the network adapters for each network must be the same on all nodes. For example, in a two-node cluster, you cannot configure network adapters on node1 with eth0 as the public interface, but on node2 have eth1 as the public interface. Public interface names must be the same, so you must configure eth0 as public on both nodes. You should configure the private interfaces on the same network adapters as well. If eth1 is the private interface for node1, eth1 should be the private interface for node2.
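The naming rule above can be checked mechanically before installation. The following is a minimal sketch, assuming you have already collected each node's role-to-interface mapping by some other means; the function name `find_interface_mismatches` and the dictionary layout are illustrative and are not part of any Oracle tool.

```python
from typing import Dict, List


def find_interface_mismatches(roles_by_node: Dict[str, Dict[str, str]]) -> List[str]:
    """Return one message per network role whose interface name differs between nodes."""
    problems = []
    # Collect every role (for example "public", "private") seen on any node.
    roles = sorted({role for mapping in roles_by_node.values() for role in mapping})
    for role in roles:
        names = {node: mapping.get(role) for node, mapping in roles_by_node.items()}
        # More than one distinct name for the same role violates the rule.
        if len(set(names.values())) > 1:
            detail = ", ".join(f"{node}={name}" for node, name in sorted(names.items()))
            problems.append(f"{role} interface differs: {detail}")
    return problems


# Hypothetical two-node layout matching the invalid example in the text.
cluster = {
    "node1": {"public": "eth0", "private": "eth1"},
    "node2": {"public": "eth1", "private": "eth1"},
}
mismatches = find_interface_mismatches(cluster)
for message in mismatches:
    print(message)
```

An empty result means every role uses the same interface name on all nodes.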

Before starting the installation, on each node, you must have at least two interfaces to configure for the public and private IP addresses. You can configure IP addresses with one of the following options:

- Oracle Grid Naming Service (GNS) using one static address defined during installation, which dynamically allocates VIP addresses using Dynamic Host Configuration Protocol (DHCP), which must be running on the network. You must select the Advanced Oracle Clusterware installation option to use GNS.

- Static addresses that network administrators assign on a network domain name server (DNS) or each node. To use the Typical Oracle Clusterware installation option, you must use static addresses.

For the public network, each network adapter must support TCP/IP. For the private network, the interconnect must support UDP or RDS (TCP for Windows) for communications to the database. Grid Interprocess Communication (GIPc) is used for Grid (Clusterware) interprocess communication. GIPc is a common communications infrastructure to replace CLSC/NS. It provides full control of the communications stack from the operating system up to whatever client library uses it. The dependency on network services (NS) before 11.2 is removed, but there is still backward compatibility with existing CLSC clients (primarily from 11.1). GIPc can support multiple communications types: CLSC, TCP, UDP, IPC, and of course, the communication type GIPc.

Use high-speed network adapters for the interconnects and switches that support TCP/IP. Gigabit Ethernet or an equivalent is recommended. If you have multiple available network interfaces, Oracle recommends that you use the Redundant Interconnect Usage feature to make use of multiple interfaces for the private network. However, you can also use third-party technologies to provide redundancy for the private network.

Oracle Clusterware Initialization

Oracle Linux 6 (OL6) and Red Hat Enterprise Linux 6 (RHEL6) deprecated inittab. On these releases, init.ohasd is configured through Upstart in /etc/init/oracle-ohasd.conf; the process /etc/init.d/init.ohasd run should still be running. Oracle Linux 7 and Red Hat Enterprise Linux 7 use systemd to start and stop services (for example, /etc/systemd/system/oracle-ohasd.service).

- Oracle Clusterware is started by the OS init daemon calling the /etc/init.d/init.ohasd startup script.

- On RHEL 6, Clusterware startup is controlled by Upstart via the /etc/init/oracle-ohasd.conf file.

    # cat /etc/init/oracle-ohasd.conf
    # Oracle OHASD startup
    start on runlevel [35]
    stop on runlevel [!35]
    respawn
    exec /etc/init.d/init.ohasd run >/dev/null 2>&1 </dev/null

- On RHEL 7, Clusterware startup is controlled by systemd (example: /etc/systemd/system/oracle-ohasd.service).
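The Upstart job shown above starts OHASD on runlevels 3 and 5 and stops it on every other runlevel. As a rough illustration of how the `[35]` and `[!35]` patterns read, here is a small sketch; the helper `runlevel_matches` is hypothetical, handles only numeric runlevels, and is not how Upstart itself is implemented.

```python
import re


def runlevel_matches(pattern: str, runlevel: int) -> bool:
    """Evaluate a runlevel pattern such as "[35]" or "[!35]" against a numeric runlevel."""
    match = re.fullmatch(r"\[(!?)(\d+)\]", pattern)
    if match is None:
        raise ValueError(f"unsupported runlevel pattern: {pattern!r}")
    negated = match.group(1) == "!"
    listed = str(runlevel) in match.group(2)
    # A leading "!" inverts the match: any runlevel NOT in the list.
    return not listed if negated else listed


for level in range(7):
    starts = runlevel_matches("[35]", level)
    stops = runlevel_matches("[!35]", level)
    print(f"runlevel {level}: start={starts} stop={stops}")
```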

GPnP Architecture: Overview

GPnP Service

The GPnP service is collectively provided by all the GPnP agents. It is a distributed method of replicating profiles. The service is instantiated on each node in the domain as a GPnP agent. The service is peer-to-peer; there is no master process. This allows high availability because any GPnP agent can crash and new nodes will still be serviced. GPnP requires the standard IP multicast protocol (provided by mDNS) to locate peer services. Using multicast discovery, GPnP locates peers without configuration. This is how a GPnP agent on a new node locates another agent that may have a profile it should use.

Name Resolution

A name defined within a GPnP domain is resolvable in the following cases:

- Hosts inside the GPnP domain use normal DNS to resolve the names of hosts outside of the GPnP domain. They contact the regular DNS service and proceed. They may get the address of the DNS server by global configuration or by having been told by DHCP.

- Within the GPnP domain, host names are resolved by using mDNS. This requires an mDNS responder on each node that knows the names and addresses used by this node, and operating system client library support for name resolution using this multicast protocol. Given a name, a client executes gethostbyname, resulting in an mDNS query. If the name exists, the responder on the node that owns the name will respond with the IP address. The client software may cache the resolution for the given time-to-live value.

- Machines outside the GPnP domain cannot resolve names in the GPnP domain by using multicast. To resolve these names, they use their regular DNS. The provisioning authority arranges the global DNS to delegate a subdomain (zone) to a known address that is in the GPnP domain. GPnP creates a service called GNS to resolve the GPnP names on that fixed address. The node on which the GNS server is running listens for DNS requests. On receipt, it translates the requests and forwards them to mDNS, collects the responses, translates them, and sends them back to the outside client. GNS is “virtual” because it is stateless. Any node in the multicast domain may host the server. The only GNS configuration is global:

- The address on which to listen on standard DNS port 53

- The names of the domains to be serviced

There may be as many GNS entities as needed for availability reasons. Oracle-provided GNS may use CRS to ensure the availability of a single GNS provider.
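The three resolution cases above reduce to a small decision on where the client and the target name sit relative to the GPnP domain. The sketch below only restates that decision; the function name and the returned labels are illustrative, and the fourth combination (outside client, outside name) is assumed to be ordinary DNS.

```python
def resolution_path(client_in_domain: bool, target_in_domain: bool) -> str:
    """Describe which mechanism resolves a name, following the cases in the text."""
    if target_in_domain:
        if client_in_domain:
            # Both sides are inside the GPnP domain: multicast DNS.
            return "mDNS"
        # Outside client: regular DNS delegates the subdomain to GNS,
        # which translates the request and forwards it to mDNS.
        return "DNS delegation to GNS, then mDNS"
    # Names outside the GPnP domain are resolved by the regular DNS service.
    return "regular DNS"


for client_inside in (True, False):
    for target_inside in (True, False):
        print(client_inside, target_inside, "->", resolution_path(client_inside, target_inside))
```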

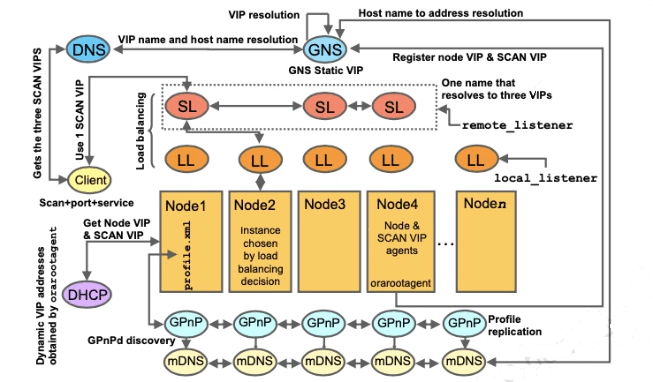

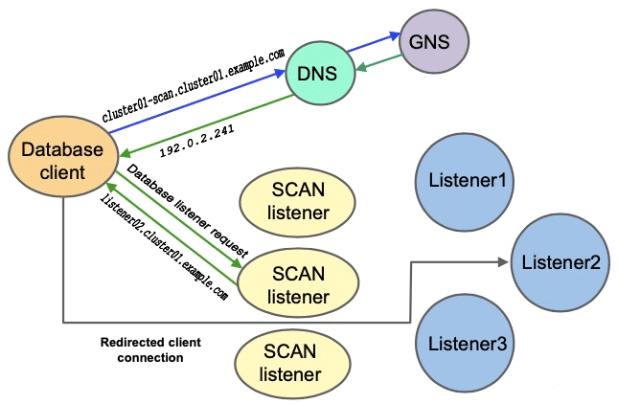

SCAN and Local Listeners

When a client submits a connection request, the SCAN listener listening on a SCAN IP address and the SCAN port is contacted on the client’s behalf. Because all services on the cluster are registered with the SCAN listener, the SCAN listener replies with the address of the local listener on the least-loaded node where the service is currently being offered. Finally, the client establishes a connection to the service through the listener on the node where the service is offered. All these actions take place transparently to the client without any explicit configuration required in the client.

During installation, listeners are created on nodes for the SCAN IP addresses. Oracle Net Services routes application requests to the least loaded instance providing the service. Because the SCAN addresses resolve to the cluster, rather than to a node address in the cluster, nodes can be added to or removed from the cluster without affecting the SCAN address configuration.
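The redirect decision described above can be pictured as choosing the least-loaded node among those that currently offer the requested service. This is a simplified sketch under that assumption; the load numbers, node names, and the function `pick_local_listener` are hypothetical, and the real listener uses metrics that this article does not detail.

```python
from typing import Dict, Optional, Set


def pick_local_listener(
    service: str,
    services_by_node: Dict[str, Set[str]],
    load_by_node: Dict[str, float],
) -> Optional[str]:
    """Return the least-loaded node offering the service, or None if no node offers it."""
    candidates = [node for node, services in services_by_node.items() if service in services]
    if not candidates:
        return None
    # Break ties on the node name so the choice is deterministic.
    return min(candidates, key=lambda node: (load_by_node[node], node))


# Hypothetical registrations and load figures.
services_by_node = {"node1": {"sales"}, "node2": {"sales", "hr"}, "node3": {"hr"}}
load_by_node = {"node1": 0.7, "node2": 0.4, "node3": 0.1}

print(pick_local_listener("sales", services_by_node, load_by_node))
print(pick_local_listener("hr", services_by_node, load_by_node))
```

Note that node3 has the lowest load overall but is never chosen for the sales service, because it does not offer it.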

Static Configuration

With static configurations, no subdomain is delegated. A DNS administrator configures the GNS VIP to resolve to a name and address configured on the DNS, and a DNS administrator configures a SCAN name to resolve to three static addresses for the cluster. A DNS administrator also configures a static public IP name and address, and virtual IP name and address for each cluster member node. A DNS administrator must also configure new public and virtual IP names and addresses for each node added to the cluster. All names and addresses are resolved by DNS.

How GPnP Works: Cluster Node Startup

When a node is started in a GPnP environment:

- Network addresses are negotiated for all interfaces using DHCP

- The Clusterware software on the starting node starts a GPnP agent

- The GPnP agent on the starting node gets its profile locally or uses resource discovery (RD) to discover the peer GPnP agents in the grid. If RD is used, it gets the profile from one of the GPnP peers that responds. The GPnP agent acquires the desired network configuration from the profile. This includes creation of reasonable host names. If there are static configurations, they are used in preference to the dynamic mechanisms. Network interfaces may be reconfigured to match the profile requirements.

- Shared storage is configured to match the profile requirements.

- System and service startup is done as configured in the image. In the case of RAC, the CSS and CRS systems will then be started, which will form the cluster and bring up appropriate database instances. The startup of services may run down their own placeholder values, or may dynamically negotiate values rather than rely on fixed-up configurations. One of the services likely to be started somewhere is the GNS system for external name resolution. Another of the services likely to be started is an Oracle SCAN listener.
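Two rules in the startup sequence above lend themselves to a short sketch: the agent prefers a local profile and falls back to a peer found through resource discovery, and static settings take precedence over dynamically negotiated ones. The data shapes and function names below are assumptions made for illustration only; they do not reflect the actual GPnP profile format.

```python
from typing import Dict, List, Optional

Profile = Dict[str, str]


def acquire_profile(local: Optional[Profile], peer_replies: List[Optional[Profile]]) -> Optional[Profile]:
    """Use the local profile if present; otherwise take the first profile a peer returns."""
    if local is not None:
        return local
    for reply in peer_replies:
        if reply is not None:
            return reply
    return None


def effective_setting(key: str, static: Dict[str, str], dynamic: Dict[str, str]) -> Optional[str]:
    """Static configuration wins over a dynamically negotiated value."""
    if key in static:
        return static[key]
    return dynamic.get(key)


# Hypothetical data: no local profile, the second peer answers.
profile = acquire_profile(None, [None, {"cluster": "cluster01"}])
address = effective_setting("vip", {"vip": "192.0.2.10"}, {"vip": "192.0.2.200"})
print(profile, address)
```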

Grid Naming Service (GNS)

Employing Grid Naming Service (GNS) assumes that there is a DHCP server running on the public network with enough addresses to assign to the VIPs and single-client access name (SCAN) VIPs. With GNS, only one static IP address is required for the cluster, the GNS virtual IP address. This address should be defined in the DNS domain. GNS sets up a multicast DNS (mDNS) server within the cluster, which resolves names in the cluster without static configuration of the DNS server for other node IP addresses.

The mDNS server works as follows: Within GNS, node names are resolved by using link-local multicast name resolution (LLMNR). It does this by translating the LLMNR “.local” domain used by the multicast resolution to the subdomain specified in the DNS query. When you select GNS, an mDNS server is configured on each host in the cluster. LLMNR relies on the mDNS that Oracle Clusterware manages to resolve names that are being served by that host.

To use GNS, before installation, the DNS administrator must establish domain delegation to the subdomain for the cluster. Queries to the cluster are sent to the GNS listener on the GNS virtual IP address. When a request comes to the domain, GNS resolves it by using its internal mDNS and responds to the query.

Single-Client Access Name

The single-client access name (SCAN) is the address used by clients connecting to the cluster. The SCAN is a fully qualified host name (host name + domain) registered to three IP addresses. If you use GNS, and you have DHCP support, then the GNS will assign addresses dynamically to the SCAN.

If you do not use GNS, the SCAN should be defined in the DNS to resolve to the three addresses assigned to that name. This should be done before you install Oracle Grid Infrastructure. The SCAN and its associated IP addresses provide a stable name for clients to use for connections, independent of the nodes that make up the cluster.

SCANs function like a cluster alias. However, SCANs are resolved on any node in the cluster, so unlike a VIP address for a node, clients connecting to the SCAN no longer require updated VIP addresses as nodes are added to or removed from the cluster.

    $ nslookup cluster01-scan.cluster01.example.com
    Server: 192.0.2.1
    Address: 192.0.2.1#53

    Non-authoritative answer:
    Name: cluster01-scan.cluster01.example.com
    Address: 192.0.2.243
    Name: cluster01-scan.cluster01.example.com
    Address: 192.0.2.244
    Name: cluster01-scan.cluster01.example.com
    Address: 192.0.2.245
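A quick way to confirm that the SCAN resolves to three addresses is to pull the answers out of saved nslookup output. The sketch below parses the sample output shown above as plain text; the function name `addresses_for` is illustrative, and the parser assumes the simple "Name:" / "Address:" layout of that sample.

```python
from typing import List

SAMPLE = """\
Server: 192.0.2.1
Address: 192.0.2.1#53

Non-authoritative answer:
Name: cluster01-scan.cluster01.example.com
Address: 192.0.2.243
Name: cluster01-scan.cluster01.example.com
Address: 192.0.2.244
Name: cluster01-scan.cluster01.example.com
Address: 192.0.2.245
"""


def addresses_for(name: str, nslookup_output: str) -> List[str]:
    """Collect the Address lines that directly follow a matching Name line."""
    addresses = []
    current_name = None
    for raw in nslookup_output.splitlines():
        line = raw.strip()
        if line.startswith("Name:"):
            current_name = line.split(":", 1)[1].strip()
        elif line.startswith("Address:") and current_name == name:
            addresses.append(line.split(":", 1)[1].strip())
            current_name = None  # each Name line pairs with one Address line
    return addresses


scan_ips = addresses_for("cluster01-scan.cluster01.example.com", SAMPLE)
print(len(scan_ips), scan_ips)
```

The DNS server's own Address line is skipped because it appears before any Name line.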

During installation, listeners are created on each node for the SCAN IP addresses. Oracle Clusterware routes application requests to the cluster SCAN to the least loaded instance providing the service. SCAN listeners can run on any node in the cluster. SCANs provide location independence for databases so that the client configuration does not have to depend on which nodes run a particular database.

Instances register with SCAN listeners only as remote listeners. Upgraded databases register with SCAN listeners as remote listeners, and also continue to register with all other listeners. If you specify a GNS domain during installation, the SCAN defaults to clustername-scan.GNS_domain. If a GNS domain is not specified at installation, the SCAN defaults to clustername-scan.current_domain.

Client Database Connections

In a GPnP environment, the database client no longer has to use the TNS address to contact the listener on a target node. Instead, it can use the EZConnect method to connect to the database. When resolving the address listed in the connect string, the DNS will forward the resolution request to the GNS with the SCAN VIP address for the chosen SCAN listener and the name of the database service that is desired. In EZConnect syntax, this would look like:

scan-name.cluster-name.company.com/ServiceName, where the service name might be the database name. The GNS will respond to the DNS server with the IP address matching the name given; this address is then used by the client to contact the SCAN listener. The SCAN listener uses its connection load balancing system to pick an appropriate listener, whose name it returns to the client in an OracleNet Redirect message. The client reconnects to the selected listener, resolving the name through a call to the GNS.
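To make the connect string concrete, the sketch below builds the default SCAN name described earlier and an EZConnect string from it. The helper names are illustrative, the service name `orcl` is a placeholder, and the optional port argument assumes the common `host:port/service` form of EZConnect.

```python
from typing import Optional


def default_scan_name(cluster_name: str, domain: str) -> str:
    """Build the default SCAN in the form clustername-scan.domain."""
    return f"{cluster_name}-scan.{domain}"


def ezconnect(host: str, service: str, port: Optional[int] = None) -> str:
    """Build an EZConnect string: host[:port]/service_name."""
    location = host if port is None else f"{host}:{port}"
    return f"{location}/{service}"


scan = default_scan_name("cluster01", "cluster01.example.com")
print(ezconnect(scan, "orcl"))
print(ezconnect(scan, "orcl", port=1521))
```

Because the string names only the SCAN and the service, it stays valid when nodes join or leave the cluster.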

The SCAN listeners must be known to all the database listener nodes and clients. The database instance nodes cross-register only with known SCAN listeners, also sending them per-service connection metrics. The SCAN known to the database servers may be profile data or stored in OCR.

Oracle ASM

Oracle ASM is a volume manager and a file system for Oracle Database files that supports single-instance Oracle Database and Oracle Real Application Clusters (Oracle RAC) configurations. ASM has been specifically engineered to provide the best performance for both single instance and RAC databases. Oracle ASM is Oracle’s recommended storage management solution that provides an alternative to conventional volume managers, file systems, and raw devices.

Combining volume management functions with a file system allows a level of integration and efficiency that would not otherwise be possible. For example, ASM is able to avoid the overhead associated with a conventional file system and achieve native raw disk performance for Oracle data files and other file types supported by ASM. ASM is engineered to operate efficiently in both clustered and nonclustered environments.

Oracle ASM files can coexist with other storage management options such as raw disks and third-party file systems. This capability simplifies the integration of Oracle ASM into pre-existing environments.

Oracle ACFS

Oracle ACFS is a multi-platform, scalable file system, and storage management technology that extends Oracle Automatic Storage Management (Oracle ASM) functionality to support all customer files. Oracle ACFS supports Oracle Database files and application files, including executables, database data files, database trace files, database alert logs, application reports, BFILEs, and configuration files. Other supported files are video, audio, text, images, engineering drawings, and other general-purpose application file data. Oracle ACFS conforms to POSIX standards for Linux and UNIX, and to Windows standards for Windows.

An Oracle ACFS file system communicates with Oracle ASM and is configured with Oracle ASM storage, as shown previously. Oracle ACFS leverages Oracle ASM functionality that enables:

- Oracle ACFS dynamic file system resizing

- Maximized performance through direct access to Oracle ASM disk group storage

- Balanced distribution of Oracle ACFS across Oracle ASM disk group storage for increased I/O parallelism

- Data reliability through Oracle ASM mirroring protection mechanisms

Oracle ACFS is tightly coupled with Oracle Clusterware technology, participating directly in Clusterware cluster membership state transitions and in Oracle Clusterware resource-based high availability (HA) management. In addition, Oracle installation, configuration, verification, and management tools have been updated to support Oracle ACFS.

Oracle Flex ASM

Oracle Flex ASM enables an Oracle ASM instance to run on a separate physical server from the database servers. With this deployment, larger clusters of Oracle ASM instances can support more database clients while reducing the Oracle ASM footprint for the overall system.

With Oracle Flex ASM, you can consolidate all the storage requirements into a single set of disk groups. All these disk groups are mounted by and managed by a small set of Oracle ASM instances running in a single cluster. You can specify the number of Oracle ASM instances with a cardinality setting. The default is three instances.

When using Oracle Flex ASM, you can configure Oracle ASM clients with direct access to storage or the I/Os can be sent through a pool of I/O servers.

A cluster is a set of nodes that provide group membership services. Each cluster has a name that is globally unique. Every cluster has one or more Hub nodes. The Hub nodes have access to Oracle ASM disks. Every cluster has at least one private network and one public network. If the cluster is going to use Oracle ASM for storage, it has at least one Oracle ASM network. A single network can be used as both a private and an Oracle ASM network. For security reasons, an Oracle ASM network should never be public. There can be only one Oracle Flex ASM configuration running within a cluster.

ASM Features and Benefits

ASM provides striping and mirroring without the need to purchase a third-party Logical Volume Manager. ASM divides a file into pieces and spreads them evenly across all the disks. ASM uses an index technique to track the placement of each piece. Traditional striping techniques use mathematical functions to stripe complete logical volumes. ASM is unique in that it applies mirroring on a file basis, rather than on a volume basis. Therefore, the same disk group can contain a combination of files protected by mirroring or not protected at all.
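The idea of spreading file pieces evenly and tracking them with an index can be illustrated with a toy model. The sketch below assigns pieces to disks round-robin and records the placement in a dictionary; round-robin placement and all names here are assumptions for illustration, not a description of ASM's actual allocation algorithm.

```python
from collections import Counter
from typing import Dict, List


def stripe_file(num_pieces: int, disks: List[str]) -> Dict[int, str]:
    """Assign each piece of a file to a disk round-robin and return the placement index."""
    return {piece: disks[piece % len(disks)] for piece in range(num_pieces)}


disks = ["disk0", "disk1", "disk2", "disk3"]
index = stripe_file(8, disks)

# The index answers "where is piece N?" directly, without a striping formula
# that is tied to a whole logical volume.
print(index[5])
print(Counter(index.values()))
```

Because placement is recorded per file, a second file in the same disk group could use a different protection level without affecting this one.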

When your storage capacity changes, ASM does not restripe all the data. However, in an online operation, ASM moves data proportional to the amount of storage added or removed to evenly redistribute the files and maintain a balanced I/O load across the disks. You can adjust the speed of rebalance operations to increase or decrease the speed and adjust the impact on the I/O subsystem. This capability also enables the fast resynchronization of disks that may suffer a transient failure.
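A back-of-the-envelope calculation shows what "proportional to the amount of storage added" means. The sketch assumes equal-sized disks and a perfectly even distribution before and after the change; under those assumptions, the share of data that has to move onto the new disks equals their share of the new total. The function name is illustrative.

```python
from fractions import Fraction


def fraction_moved_on_add(existing_disks: int, added_disks: int) -> Fraction:
    """Share of data that must move to new disks for an even spread (equal-sized disks assumed)."""
    if existing_disks <= 0 or added_disks < 0:
        raise ValueError("need at least one existing disk and a non-negative number of added disks")
    return Fraction(added_disks, existing_disks + added_disks)


moved = fraction_moved_on_add(existing_disks=4, added_disks=1)
print(f"Adding 1 disk to 4 moves about {float(moved):.0%} of the data")
```

A full restripe would rewrite far more than this, which is why the rebalance can run online with an adjustable impact on I/O.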

ASM supports all Oracle database file types. It supports Real Application Clusters (RAC) and eliminates the need for a cluster Logical Volume Manager or a cluster file system. In extended clusters, you can set a preferred read copy.

ASM is included in the Grid Infrastructure installation. It is available for both the Enterprise Edition and Standard Edition installations.

To summarise the features:

- Stripes files rather than logical volumes

- Provides redundancy on a file basis

- Enables online disk reconfiguration and dynamic rebalancing

- Significantly reduces the time to resynchronize after a transient failure by tracking changes while the disk is offline

- Provides adjustable rebalancing speed

- Is cluster-aware

- Supports reading from mirrored copy instead of primary copy for extended clusters

- Is automatically installed as part of the Grid Infrastructure